Why Product Recommendations Don’t Convert (and What to Do Instead)

Most product recommendations miss intent. Learn the real reasons they fail and the simple shifts that make shoppers click, explore, and buy.

Every ecommerce platform claims to have “AI-powered recommendations.” Yet open any brand site and you’ll see the same carousels: “You might also like” or “Recommended for you.” Different names, same guesswork.

The irony? Shoppers don’t ignore those widgets because they’re blind to them. They ignore them because they’re irrelevant. Most systems are built to chase patterns, not to read what’s happening in front of them.

They look at catalog tags, purchase history, and “people who bought this also bought that” data, as if shopping were a static equation. But it isn’t.

Behavior changes by the minute. The same person could browse workwear in the morning and party edits at night. When your recommendation engine doesn’t notice that shift, it’s already out of sync.

In this post, we’ll break down why most recommendation systems fail, what modern discovery should look like, and how to build contextual recommendations that actually drive decisions.

Why most recommendation engines fail

Most recommendation engines still run on an idea that made sense ten years ago: that shoppers who act alike want the same things. It’s the “people who bought this also bought that” logic every ecommerce platform inherited from Amazon. It worked when behavior was predictable. It doesn’t anymore.

Here are the most common reasons static recommendation engines fail to convert:

Problem 1: Static rules can’t keep up with live behavior

Static recommendation engines still depend on catalog tags, purchase history, and broad category overlaps. They assume that what a shopper wanted once will stay true forever.

However, intent shifts constantly. The same visitor might browse workwear during a weekday lunch break and festive styles that night. Someone shopping for themselves in the morning could be buying a gift by evening.

But many systems treat every action as if it came from the same person, in the same state of mind. They can’t see that context has changed, so they keep repeating yesterday’s assumptions.

Problem 2: Behavior without context means nothing

Every click, scroll, and second of dwell time gets treated as equal, but it’s the order and speed that reveal intent. For example, a visitor jumping through three moisturizers in ten seconds isn’t engaged; they’re undecided. By contrast, a visitor who lingers on one product detail page (PDP) and checks reviews is closer to purchase.

A good salesperson never recommends based on who you are; they respond to what you’re doing right now. If you’re comparing two serums, they’ll talk about texture and results. If you’re exploring a new category, they’ll show you what fits your need, not your profile.

That’s what digital recommendation engines should do: notice what’s happening in the moment and adjust.

Problem 3: More recommendations don’t mean better discovery

Traditional recommendation systems measure success by how many items they can suggest. But shoppers don’t need twenty similar products; they need two that make sense.

When you flood a page with carousels and “you might also like” lists, you’re not guiding the shopper; you’re giving them more work. Real personalization simplifies the decision; it doesn’t multiply the options.

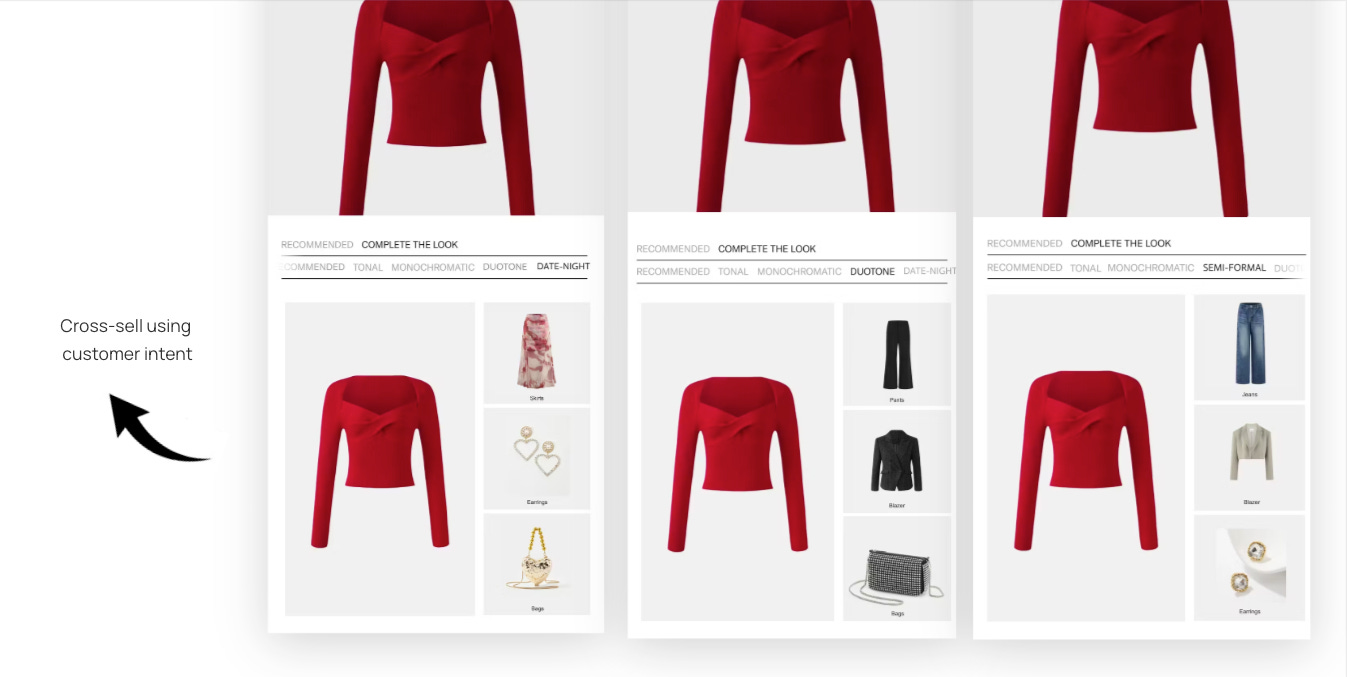

Lookalike logic reduces personalization to pattern-matching. Intent-based logic treats every session as a fresh signal. One copies behavior; the other interprets it. The difference shows up in how the shopper feels: one makes them scroll more, the other helps them decide faster.

The real job of recommendations: help narrow choices, not add more

Most recommendation systems treat discovery like a numbers game: the more options shown, the better. But shoppers don’t want more. They want direction.

The job of a good recommendation engine is to help people decide, not browse longer. When a visitor lands on your site, they’re not asking for every possible variant. They’re looking for a small set of confident choices.

The “two-choice” rule

For most categories, two to four relevant options are enough. It’s the digital equivalent of a salesperson placing two products on the counter and saying, “Try these, they fit what you’re looking for.”

Let’s take the example of someone exploring denim: they don’t need thirty near-identical jeans. They need two pairs that make sense: one that matches their browsing behavior, and one that smartly contrasts it, maybe a different fit or price range. That contrast builds confidence.

What to do instead: Build contextual discovery, not static widgets

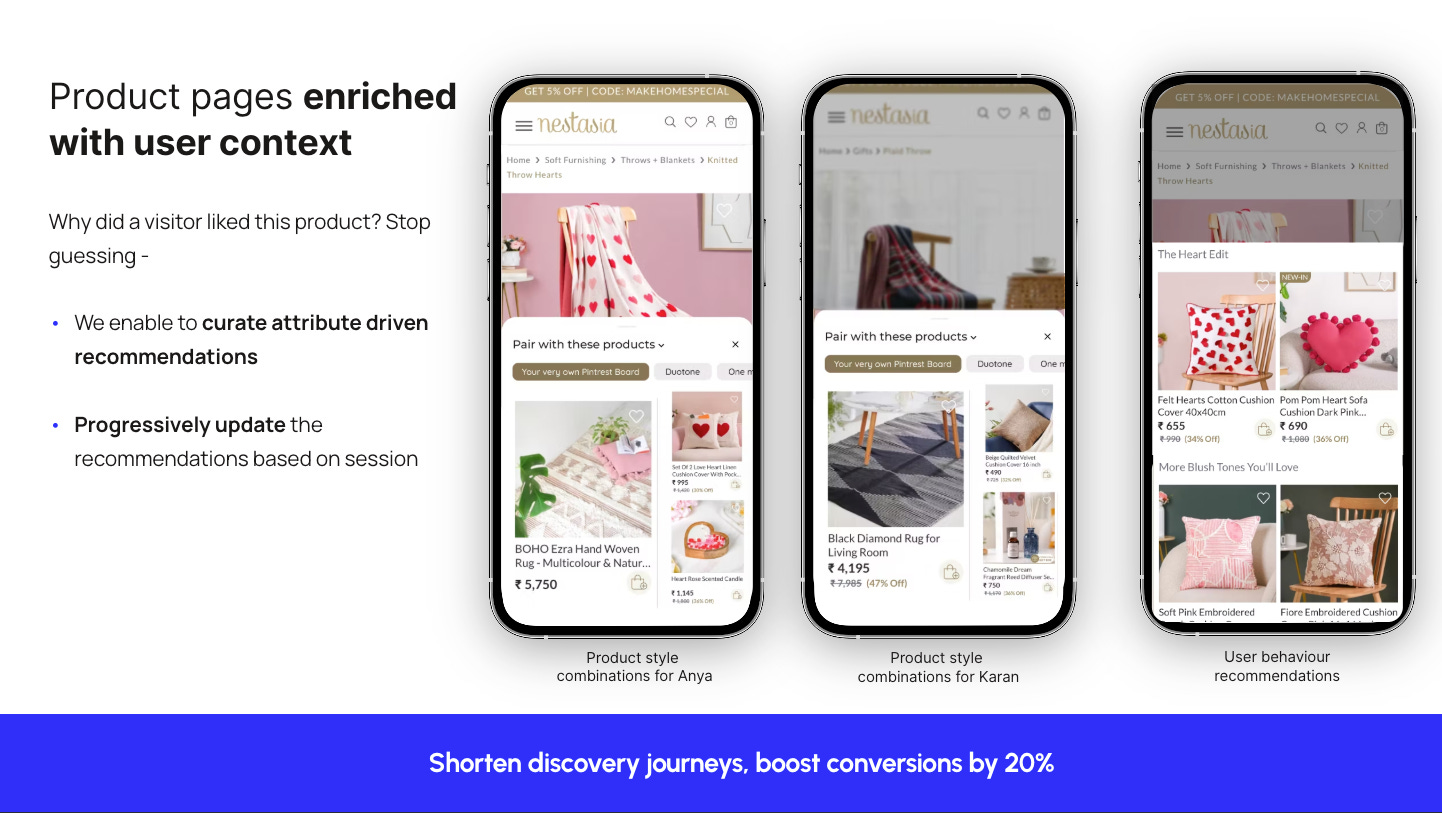

Static recommendation widgets are built to guess. They surface “related items” because that’s all they know how to do. The problem is that these systems assume behavior is linear when it’s actually fluid.

Contextual discovery works differently. It doesn’t predict based on the past; it interprets what’s happening right now. It reads micro-signals, adjusts in-session, and refines after every interaction.

Here’s how you build that kind of intelligence into your store, step by step.

Step 1: Use live session signals

Most brands track clicks, scrolls, and time on site, but those signals mean nothing unless they’re read together. You need to capture micro-behaviors that reveal the state of intent, not just activity.

Start by mapping three layers of input:

Depth: how far someone scrolls, and where they slow down.

Engagement: what they hover on, zoom into, or compare.

Decision cues: if they open reviews, switch variants, or check delivery time.

Then, label every session with a live intent state: browsing, evaluating, or deciding. Use this classification to drive your logic:

Browsing → show lightweight, varied SKUs to help them explore.

Evaluating → push proof-based cues (top-rated, ingredient-focused, verified).

Deciding → simplify choices, bundle complementary SKUs, or surface urgency offers.
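To make the labeling step concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `SessionSignals` fields, the thresholds, and the `STRATEGY` table are hypothetical, and a real store would tune them against its own session data.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    """Hypothetical per-session counters, one per input layer."""
    max_scroll_depth: float      # depth: 0.0 (top) to 1.0 (bottom of page)
    engagement_events: int       # engagement: hovers, zooms, compares
    decision_cue_events: int     # decision cues: reviews, variants, delivery checks


def classify_intent(signals: SessionSignals) -> str:
    """Label a session as browsing, evaluating, or deciding.

    The thresholds are placeholder assumptions, not tested values.
    """
    if signals.decision_cue_events >= 2:
        return "deciding"
    if signals.engagement_events >= 1 or signals.decision_cue_events == 1:
        return "evaluating"
    return "browsing"


# Maps each intent state to the recommendation strategy described above.
STRATEGY = {
    "browsing": "show lightweight, varied SKUs",
    "evaluating": "push proof-based cues",
    "deciding": "simplify choices, bundle, or surface urgency offers",
}

session = SessionSignals(max_scroll_depth=0.8, engagement_events=1, decision_cue_events=3)
state = classify_intent(session)
print(state, "->", STRATEGY[state])
```

The point of the sketch is the order of the checks: decision cues outrank engagement, and engagement outranks plain scrolling, so a session is labeled by its strongest signal rather than its most frequent one.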

Step 2: Adapt within the same session

Most recommendation systems treat learning as a post-event task. By the time your engine updates, the visitor has already left. You need to build rules that adapt mid-session:

If someone filters by “under ₹999” and scrolls fast, re-rank products in that price band and drop premium SKUs out of view.

If they zoom in on bright colors, re-order the grid with similar palettes for continuity.

If they check the delivery section twice, prioritize SKUs that are in stock and ship faster to their location.

If they scroll back up the page, surface a “recently viewed” block to anchor them again.

This is how you make your recommendations feel intelligent: by responding faster than shoppers expect.
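Two of the rules above (the price filter and the repeated delivery check) can be sketched as a small re-ranking function. This is an illustrative sketch, not a reference implementation: the `Product` fields, the `rerank` function, and the "two checks" threshold are all hypothetical names and values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Product:
    """Hypothetical product record; field names are illustrative."""
    sku: str
    price: float
    in_stock: bool
    ship_days: int


def rerank(products: List[Product],
           price_cap: Optional[float] = None,
           delivery_checks: int = 0) -> List[Product]:
    """Re-order the grid using signals from the current session."""
    ranked = list(products)
    if price_cap is not None:
        # Shopper filtered by price: drop premium SKUs out of view.
        ranked = [p for p in ranked if p.price <= price_cap]
    if delivery_checks >= 2:
        # Shopper checked delivery twice: in-stock first, then faster shipping.
        # sorted() is stable, so ties keep their existing order.
        ranked = sorted(ranked, key=lambda p: (not p.in_stock, p.ship_days))
    return ranked


catalog = [
    Product("A", 1500, True, 2),
    Product("B", 799, False, 1),
    Product("C", 899, True, 5),
    Product("D", 499, True, 2),
]
print([p.sku for p in rerank(catalog, price_cap=999, delivery_checks=2)])
# prints ['D', 'C', 'B']
```

Because the function only reads session-level inputs, it can run again after every interaction, which is what keeps the grid in step with the shopper.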

Step 3: Design for clarity, not clutter

Your store doesn’t need more recommendations; it needs smarter ones. Set a hard rule: two to four visible suggestions per zone, and each slot must serve a role:

Reinforce: the most contextually aligned option (similar tone, use case, or ingredient).

Contrast: a smart alternative (different fit, higher margin, or better-rated).

Advance: a next-step add-on that fits their current goal.
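One way to enforce the slot roles is to pick the best candidate per role and cap the zone size. The sketch below assumes each candidate already carries a role label and a relevance score; the `Candidate` type, the `score` field, and `fill_zone` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List

ROLES = ("reinforce", "contrast", "advance")
MAX_PER_ZONE = 4  # upper bound from the hard rule above


@dataclass
class Candidate:
    """Hypothetical scored candidate for a recommendation zone."""
    sku: str
    role: str     # one of ROLES
    score: float  # assumed relevance score, higher is better


def fill_zone(candidates: List[Candidate], max_slots: int = MAX_PER_ZONE) -> List[Candidate]:
    """Keep the top-scoring candidate for each role, in role order."""
    best: Dict[str, Candidate] = {}
    for c in candidates:
        if c.role not in ROLES:
            continue  # ignore candidates without a recognized role
        if c.role not in best or c.score > best[c.role].score:
            best[c.role] = c
    picks = [best[role] for role in ROLES if role in best]
    return picks[:max_slots]


pool = [
    Candidate("serum-1", "reinforce", 0.91),
    Candidate("serum-2", "reinforce", 0.84),
    Candidate("serum-3", "contrast", 0.77),
    Candidate("toner-1", "advance", 0.65),
    Candidate("toner-2", "advance", 0.70),
]
print([c.sku for c in fill_zone(pool)])
# prints ['serum-1', 'serum-3', 'toner-2']
```

Selecting by role rather than by raw score is the design choice that matters here: it stops a zone from filling up with near-duplicates of the top-scoring item.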

Use contextual cues to guide, not shout. Replace lazy headers like “You may also like” with lines that mirror intent:

“Popular in your city”

“Better suited for oily skin”

“Pairs well with what you viewed”

That single shift, from repetition to relevance, adds clarity without noise.

Step 4: Close the loop with data

Don’t just log clicks; learn from them. Map your recommendation performance by zone, not SKU. Ask:

Which section gets engagement: PDP carousel, homepage block, or cart cross-sell?

What framing works better: trust-based (“top rated”) or contextual (“recently viewed”)?

Do intent-specific widgets convert faster than generic ones?

Feed these learnings back into your session logic weekly. For example, if your “exploration” block drives time-on-site but not conversion, that’s fine; it’s doing its job upstream. On the other hand, if your “decision” block is ignored, refine what triggers it.
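Measuring by zone can start as a simple aggregation over logged events. The sketch below assumes events arrive as `(zone, event_type)` pairs with the types `impression`, `click`, and `purchase`; that event shape, the zone names, and the `zone_report` function are illustrative assumptions, not a specific analytics schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def zone_report(events: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Compute click-through and conversion rates per recommendation zone."""
    counts = defaultdict(lambda: {"impression": 0, "click": 0, "purchase": 0})
    for zone, kind in events:
        if kind in counts[zone]:  # skip event types this sketch does not track
            counts[zone][kind] += 1

    report = {}
    for zone, c in counts.items():
        shown = c["impression"]
        report[zone] = {
            # Rates are per impression; zones never shown report 0.0.
            "ctr": c["click"] / shown if shown else 0.0,
            "conversion": c["purchase"] / shown if shown else 0.0,
        }
    return report


log = (
    [("pdp_carousel", "impression")] * 4
    + [("pdp_carousel", "click")] * 2
    + [("pdp_carousel", "purchase")]
    + [("cart_cross_sell", "impression")] * 2
    + [("cart_cross_sell", "click")]
)
for zone, stats in zone_report(log).items():
    print(zone, stats)
```

Running this per intent state as well as per zone would answer the third question above, whether intent-specific widgets outperform generic ones.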

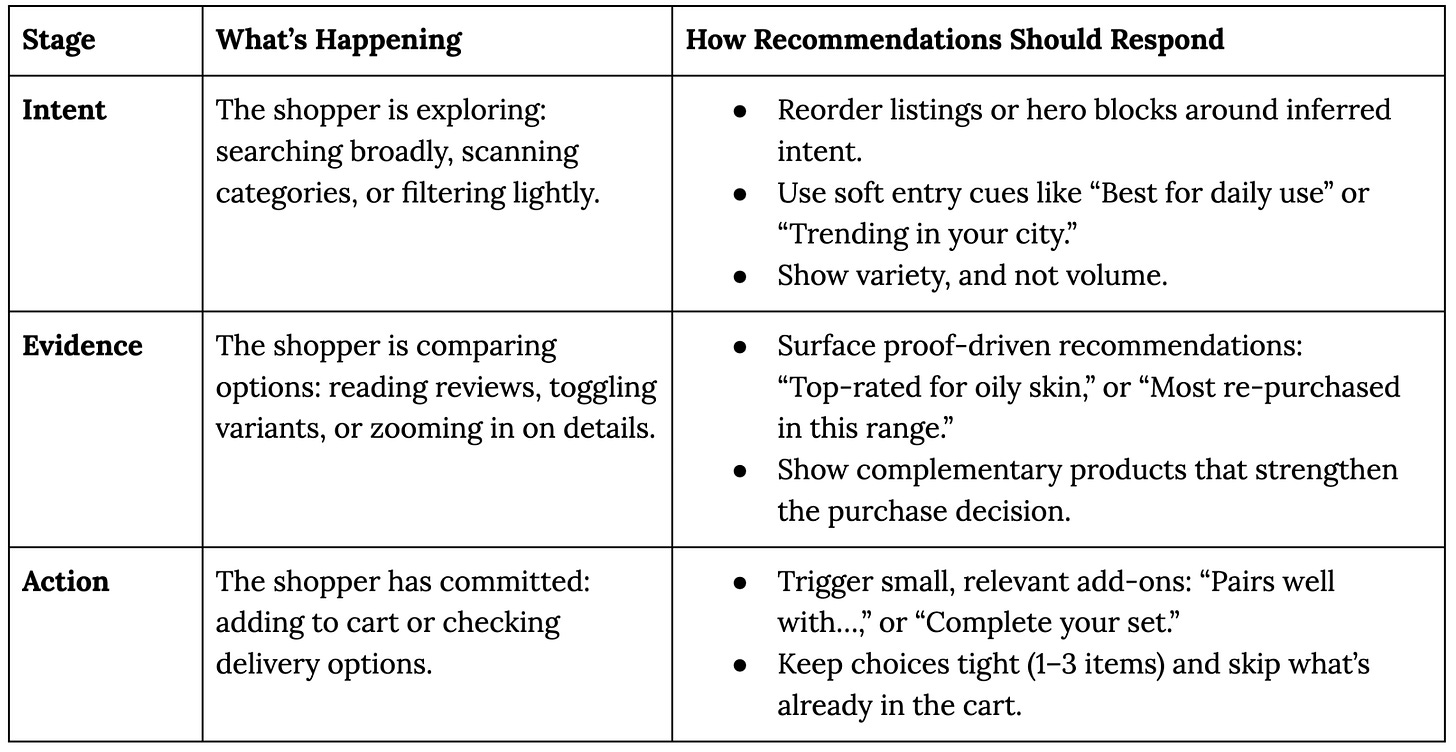

Framework: The “Intent → Evidence → Action” model

Every visitor moves through three invisible stages before buying: they look for something, they test what they’ve found, and they decide. Most recommendation engines ignore this rhythm. They show the same blocks to everyone, regardless of where the visitor is in the journey.

Here’s a framework to fix this:

Conclusion: Relevance is the new conversion strategy

Static recommendation systems mistake activity for intent. They show more options instead of sharper ones, and that’s exactly where conversions leak.

When your store reacts to intent in real time, every recommendation starts serving a purpose: it helps shoppers move forward, not start over. That’s what modern product discovery looks like. Fewer choices, sharper context, faster decisions.

Adaptive recommendation systems make that possible. They don’t rely on static rules or past data. Instead, they learn from what’s happening in the moment (how visitors scroll, what they compare, what slows them down) and adjust instantly.

Tools like Helium’s personalization engine re-rank products live, reading over twenty visitor signals per session, from scroll depth and variant toggles to city tier, device, and delivery context. The result is simple: shoppers find what fits faster, and stores convert more efficiently without adding friction or clutter.

Want to see how adaptive recommendations actually work in motion? Book a demo with Helium!